Dear English-speaking readers of this blog,

This post is about the anti-acne drug Diane-35, which (along with other 3rd and 4th generation combined oral contraceptives (COCs)) has been linked to the deaths of several women in Canada, France and the Netherlands. Since there is a lot of media attention (and panic) in the Netherlands, the remainder of this post was originally written in Dutch. Please write in the comments (or tweet) if you would like me to summarize the health concerns about these COCs in a separate English post.

————————-

Media Hype

There has been quite a lot of media attention for Diane-35 lately. It all started in France, where the French medicines regulator ANSM wanted to take Diane-35* off the market at the end of January because 4 women had died after using it over the past 25 years. This was rejected on appeal, after which the ANSM asked the EMA (European Medicines Agency) to re-examine the safety of Diane and of 3rd/4th generation combined oral contraceptive pills (COCs).

In January a 21-year-old user of Diane-35 also died in the Netherlands. The Netherlands Pharmacovigilance Centre Lareb subsequently received 97 retrospective reports of adverse effects of Diane-35. These included 9 deaths from 2011 and earlier.** Deaths were also reported among women who had used comparable (3rd and 4th generation) oral contraceptives, such as Yasmin.

All of these women died of blood clots in blood vessels (thrombosis or pulmonary embolism). In total there were 89 reports of blood clots, which is always a serious adverse effect, even when it is not fatal.

This prompted Canada and Belgium to dig into their statistics as well. In Canada, 11 women taking Diane-35 turned out to have died since 2000, and in Belgium there have been 29 reports of thrombosis associated with 3rd/4th generation pills since 2008 (5 with Diane-35, no deaths).

This news hit like a bombshell. Many people panicked, or are angry at Bayer*, the CBG (College ter Beoordeling van Geneesmiddelen, the Dutch Medicines Evaluation Board) and/or Minister Schippers, who in their view have failed to act. On Twitter I see a constant stream of tweets like:

“Diane-35 pill: is it called that because you don’t always make it to 35 on it?”

“We pull tons of #beef off the market over a bit of #horsemeat. But deaths from the Diane 35 #pill? The government does nothing about them.”

Old News

Such reactions are greatly exaggerated. There is absolutely no reason to panic.

Still, where there is smoke, there is fire, even if in this case it is only a small one.

But as far as Diane-35 is concerned, that smoke has been there for years. Why is everyone suddenly shouting “Fire!” now?

Most of the deaths date from before this year. The French authorities are probably acting so decisively now because they were accused of laxity in the recent scandals over PIP breast implants and Mediator, which caused more than 500 deaths. [Reuters, see also Henk Jan Out’s blog]

Moreover, it has long been known that Diane-35 increases the risk of blood clots.

Not Just Diane-35

The risks of Diane-35 cannot be viewed separately from the risks of oral contraceptives (OCs) in general.

In composition, Diane-35 closely resembles the 3rd generation OCs. It is unique, however, in that it contains cyproterone acetate instead of a 3rd generation progestogen. ‘The pill’ contains levonorgestrel, a 2nd generation progestogen. In addition, all combined OC pills (nowadays) contain a low dose of ethinylestradiol.

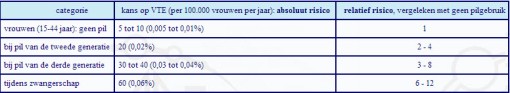

As mentioned, it has been known for years that all OCs, including ‘the pill’, slightly increase the risk of blood clots in blood vessels [1,2,3]. 2nd generation OCs (with levonorgestrel) increase that risk by a factor of 4 at most. Third generation pills appear to increase it further. By exactly how much is a matter of debate. As for Diane-35, some see little to no effect [4], while others see an effect that is 1.5 [5] to 2 times [3] stronger. The overall picture looks roughly like this:

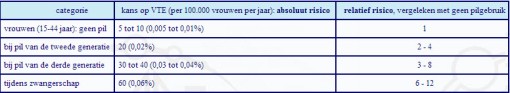

Absolute and relative risk of VTE (venous thromboembolism).

Source: http://www.anticonceptie-online.nl/pil.htm

Risks in Perspective

A 1.5 to 2 times greater risk compared with the “regular pill” sounds like an enormous risk. It would indeed be a large effect if thromboembolism were common. Suppose 1 in 100 people developed thrombosis each year; then 2-4 in 100 people per year would develop thrombosis on ‘the pill’ and 3-8 on Diane-35 or a 3rd or 4th generation pill. That is a large absolute risk, one you would not normally take.

But thromboembolism is rare. It occurs in 5-10 per 100,000 women per year, and in total about 1 in a million women will die from it. That is a minuscule chance. Four to six times a chance of slightly more than 0 is still a chance of almost 0. So in absolute terms, Diane-35 and OCs carry little risk.
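The difference between relative and absolute risk can be made concrete with a small calculation. The sketch below simply multiplies the figures quoted in this post: a factor of 2-4 for ‘the pill’ versus no pill, and a further factor of 1.5-2 for Diane-35 or a 3rd/4th generation pill versus ‘the pill’. Treating these factors as multiplicative is an assumption made for illustration, and the function and variable names are invented here. The results are products of the quoted numbers, not measured incidences.

```python
from typing import Dict, Tuple

Range = Tuple[float, float]  # (low, high) cases per 100,000 women per year

# Relative risks as quoted in the post; combining them by multiplication
# is an illustrative assumption.
PILL_VS_NO_PILL: Range = (2, 4)   # 'the pill' versus no pill
DIANE_VS_PILL: Range = (1.5, 2)   # Diane-35 or 3rd/4th generation versus 'the pill'


def scale(risk: Range, factor: Range) -> Range:
    """Multiply a (low, high) risk range by a (low, high) relative-risk range."""
    return (risk[0] * factor[0], risk[1] * factor[1])


def scenario(baseline: Range) -> Dict[str, Range]:
    """Absolute yearly risk per 100,000 for non-users, pill users and Diane-35 users."""
    pill = scale(baseline, PILL_VS_NO_PILL)
    diane = scale(pill, DIANE_VS_PILL)
    return {"no pill": baseline, "pill": pill, "diane": diane}


# Hypothetical common disease: 1 in 100 per year = 1,000 per 100,000.
hypothetical = scenario((1_000, 1_000))
# Baseline quoted in the post for thromboembolism: 5-10 per 100,000 per year.
actual = scenario((5, 10))

for label, result in (("hypothetical", hypothetical), ("actual", actual)):
    for group, (low, high) in result.items():
        print(f"{label:12s} {group:8s} {low:g}-{high:g} per 100,000 per year")
```

With the hypothetical baseline, the same relative risks give 3,000-8,000 cases per 100,000 (3-8 in 100); with the rare baseline they give 15-80 per 100,000. The multiplier is identical in both cases, but only the first would be a large absolute risk.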

In addition, thrombosis need not be caused directly by the pill. Smoking, age, (over)weight and a hereditary predisposition to clotting problems can also play a (major) role, and these factors can act together. For this reason OCs (including the pill) are not recommended for risk groups (older women who smoke heavily, women with a predisposition to thrombosis, etc.).

The number of adverse effects possibly associated with the use of Diane-35 actually indicates that it is a relatively safe drug.

Acceptable risk?

To put it further into perspective: pregnancy carries a 2 times higher risk of thrombosis than Diane-35, and in the 12 weeks after delivery the risk is another 4-8 times higher than during pregnancy (FDA). Yet this will not stop women from becoming pregnant. Having a child usually (implicitly) outweighs the (small) risks (of which thrombosis is one).

So the risks (and their likelihood) cannot be viewed separately from the benefits. If the benefit is large, people will even accept a certain risk (depending on the severity of the condition and the benefit of treatment). On the other hand, you do not want to run even a very small risk if a drug does not help you or if there are equally good but safer alternatives.

But shouldn’t the patient be allowed to weigh that up herself, together with her doctor?

No place for Diane-35 as a contraceptive

Diane-35 has a contraceptive effect, but it is not (or no longer) registered for that purpose. The latest contraception guideline of the Dutch College of General Practitioners (NHG), from 2010 [6], states explicitly that there is no longer a place for the pill with cyproterone acetate. This is because the regular ‘pill’ prevents pregnancy just as well and carries a (slightly) lower risk of thrombosis as a side effect. So why run a potentially higher risk when there is no need? Unfortunately, the NHG guideline is less explicit about 2nd and 3rd generation OCs.

Other countries often take the same view (in the US, however, Diane-35 is not registered at all).

This is what, for example, the RCOG (Royal College of Obstetricians and Gynaecologists, UK) says in its evidence-based guideline dealing specifically with OCs and the risk of thrombosis [1]:

Diane-35 as a treatment for severe acne and hirsutism.

Because the cyproterone acetate in Diane-35 has a strong anti-androgenic effect, it can be used for severe acne and hirsutism (the latter mainly in women with PCOS, a gynaecological condition). If desired, it can serve as a contraceptive at the same time: two birds with one stone.

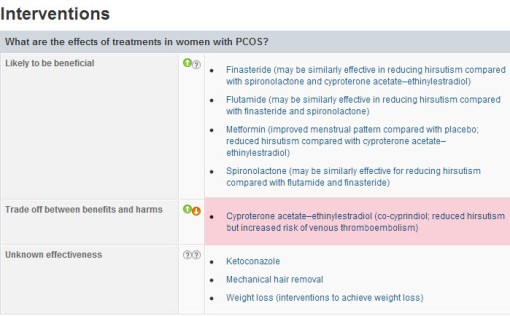

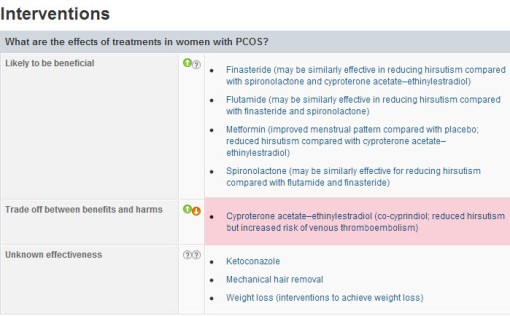

Clinical Evidence, an excellent evidence-based source that weighs the benefits and harms of treatments against each other, concludes that drugs containing cyproterone acetate, despite working well, are not preferred over drugs such as metformin for severe hirsutism (in PCOS). The risk of thrombosis was taken into account in this assessment. [7]

According to a Cochrane systematic review, all OCs did help against acne, but OCs with cyproterone seemed somewhat more effective than pills with a 2nd or 3rd generation progestogen. However, the results were conflicting and the studies were not very strong. [8]

Some conclude on the basis of this Cochrane review that all OCs work equally well and that the regular pill is therefore preferable (see, for example, this recent article by Helmerhorst in the BMJ [2] and the NHG guideline on acne [9]).

But the most recent guideline on acneiform dermatoses (Richtlijn Acneïforme Dermatosen) [10] of the Dutch Society for Dermatology and Venereology (NVDV) draws a different conclusion from the same evidence:

The Dutch dermatologists thus make a positive recommendation for Diane-35 over other contraceptives in women who also want contraception. Nowhere in this guideline is thrombosis explicitly mentioned as a possible side effect.

Prescribing in practice.

If Diane-35 is not prescribed as a contraceptive and is used only for severe forms of acne or hirsutism, how can a drug with such a low risk become such a sizeable problem? After all, both the target group and the chance of side effects are very small. And what about 3rd and 4th generation OCs, which will not even be prescribed for acne? There the target group should be smaller still.

The reality is that the scale of the problem stems not so much from on-label use but, as Janine Budding already pointed out on her blog Medical Facts, from off-label prescribing, that is, prescribing for an indication other than the one for which the drug is registered. In France, half of the women who use OCs use a 3rd or 4th generation OC: that is downright excessive, and not in line with the guidelines.

In the Netherlands, more than 161,000 women were taking Diane-35 or a generic version with exactly the same effect. Many Dutch and Canadian women, too, use Diane-35 and other 3rd and 4th generation OCs purely as a contraceptive. This is partly because some GPs prescribe it out of habit, or think it will clear up a girl’s pimples at the same time, and partly because, in the Netherlands and France, Diane-35 is reimbursed and the regular pill is not. There is, certainly in France, a run on a ‘free’ pill.

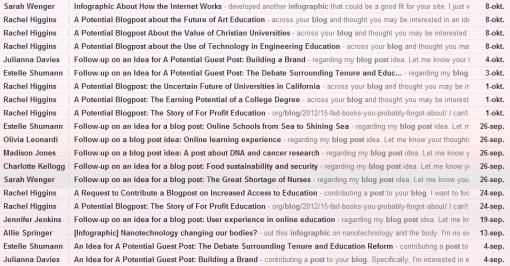

Online companies may also play a role. They often provide poor information. One such company (with deficient information about Diane on its website) even goes so far as to create the Twitter account @diane35nieuws as a front for online pill sales.

What now?

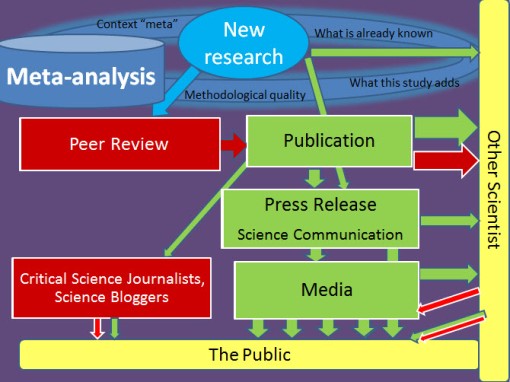

Although the risks of Diane-35 have long been known and appear to be small, and are moreover comparable to those of 3rd and 4th generation OCs, a massive backlash against Diane-35 has got under way that seems unstoppable. It is not the experts but the media and politicians who seem to be conducting the debate. This is very confusing and sometimes misleading for the patient.

In my view, the decision by Dutch GPs, gynaecologists and, recently, dermatologists not to prescribe Diane-35 to new patients for the time being, until the authorities have ruled on its safety***, is a sensible one given the current unrest.

What is not sensible is to simply stop taking Diane-35. Always consult your doctor first about the best option for you.

In 1995 a similar reaction to warnings about the thrombosis risks of certain OCs led to a veritable “pill scare”: women switched to a different pill en masse or stopped taking it altogether. The result: a spike in unwanted pregnancies (which, incidentally, carry a much higher risk of thrombosis) and abortions. The conclusion at the time [11]:

“The level of risk should, in future, be more carefully assessed and advice more carefully presented in the interests of public health.”

Apparently this lesson has been lost on the Netherlands and France.

Although I think Diane-35 offers a real advantage over existing drugs for only a limited group, it is regrettable that, on the basis of unfounded reactions, patients may soon no longer have a choice of their own. Shouldn’t they be allowed to weigh the benefits and harms themselves?

It is understandable (though perhaps not very professional) that dermatologists are reacting with some frustration now that a particular group of patients is falling through the cracks. Moreover, they are having to change policy not on the basis of evidence and arguments but under pressure from the media and politicians.

Because let’s be honest, some dermatological and gynaecological patients do benefit from Diane-35.


And finally, a wonderful response from an acne patient to a blog post by Ivan Wolfers. She sums up the essence in a few sentences. Like the ladies above, she is a patient who makes well-considered decisions with her doctor on the basis of the available information.

As it should be…

Notes

* Diane-35 is produced by Bayer. It is also known as Minerva and Elisa, and abroad, for example, as Dianette. There are also many unbranded preparations with the same composition.

**In the meantime, another 4 fatal cases after use of Diane-35 have been added in the Netherlands (Artsennet, 2013-03-11).

***Hopefully the regular pill will also be included in the comparison. That is only fair: after all, it is a comparison.

References

- Venous Thromboembolism and Hormone Replacement Therapy – Green-top Guideline 40 (2010), Royal College of Obstetricians and Gynaecologists, 2011

- Helmerhorst F.M. & Rosendaal F.R. (2013). Is an EMA review on hormonal contraception and thrombosis needed?, BMJ (Clinical research ed.), PMID: 23471363

- van Hylckama Vlieg A., Helmerhorst F.M., Vandenbroucke J.P., Doggen C.J.M. & Rosendaal F.R. (2009). The venous thrombotic risk of oral contraceptives, effects of oestrogen dose and progestogen type: results of the MEGA case-control study., BMJ (Clinical research ed.), PMID: 19679614

- Spitzer W.O. (2003) Cyproterone acetate with ethinylestradiol as a risk factor for venous thromboembolism: an epidemiological evaluation., Journal of obstetrics and gynaecology Canada : JOGC = Journal d’obstétrique et gynécologie du Canada : JOGC, PMID: 14663535

- Martínez F., Ramírez I., Pérez-Campos E., Latorre K. & Lete I. (2012) Venous and pulmonary thromboembolism and combined hormonal contraceptives. Systematic review and meta-analysis., The European journal of contraception & reproductive health care : the official journal of the European Society of Contraception, PMID: 22239262

- NHG-Standaard Anticonceptie 2010. Anke Brand, Anita Bruinsma, Kitty van Groeningen, Sandra Kalmijn, Ineke Kardolus, Monique Peerden, Rob Smeenk, Suzy de Swart, Miranda Kurver, Lex Goudswaard.

- Cahill D. (2009). PCOS., Clinical evidence, PMID: 19445767

- Arowojolu A.O., Gallo M.F., Lopez L.M. & Grimes D.A. (2012). Combined oral contraceptive pills for treatment of acne., Cochrane database of systematic reviews (Online), PMID: 22786490

- Kertzman M.G.M., Smeets J.G.E., Boukes F.S. & Goudswaard A.N. [Summary of the practice guideline ‘Acne’ (second revision) from the Dutch College of General Practitioners]., Nederlands tijdschrift voor geneeskunde, PMID: 18590061

- Richtlijn Acneïforme Dermatosen, © 2010, Nederlandse Vereniging voor Dermatologie en Venereologie (NVDV)

- Furedi A. The public health implications of the 1995 ‘pill scare’., Human reproduction update, PMID: 10652971
